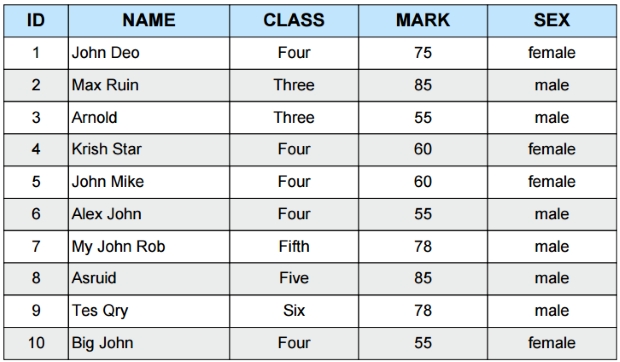

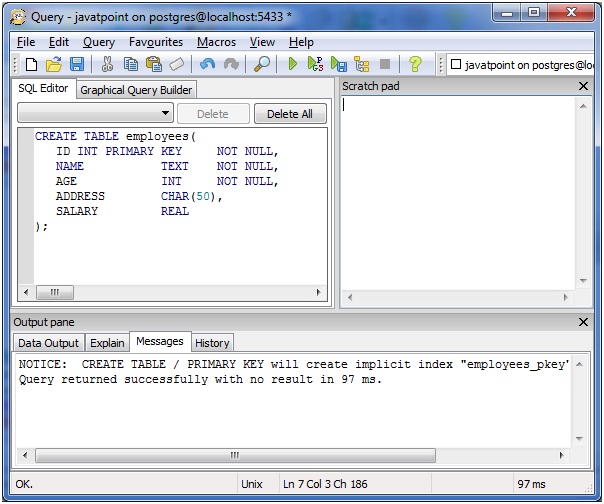

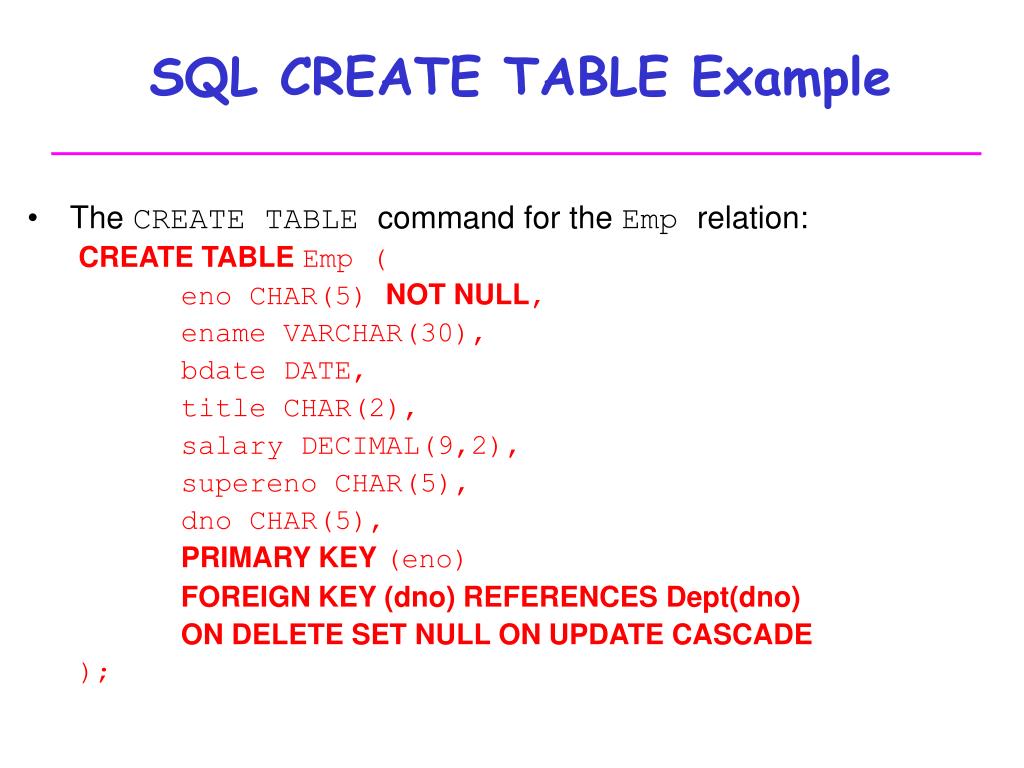

Airflow macros bring Pythonic capabilities to your SQL script. Also note that we have configured the write process to be an UPSERT and not an INSERT, since an INSERT would have introduced duplicate rows in the output. The next time you are writing an ETL pipeline, consider how it will behave if a backfill needs to be done. This helps maintain the idempotency of your DAG and prevents unintended side effects (which would have happened if we had used an INSERT).

As always, please let me know if you have any questions or comments in the comment section below.

In relational databases, SQL is used to store data in structures. These structures are simply tables containing data in the form of fields and records. Here, a field is a column defining the type of data to be stored in a table, and a record is a row containing actual data. SQL provides various queries to interact with the data: creating tables, updating them, deleting them, and so on.

To create a table in SQL, the CREATE TABLE statement is used. One can create any number of tables in an SQL Server database, subject to a limit on the total number of objects in the database: including tables, views, indexes etc., a database cannot exceed 2,147,483,647 objects. In addition, a single user-defined table can define a maximum of 1024 columns. An SQL query to create a table must define the structure of the table.
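To make the CREATE TABLE description concrete, here is a minimal sketch in Python. It uses the standard library's `sqlite3` module with an in-memory database as a stand-in for SQL Server or Postgres, so the type names are SQLite's. The table name `customers` and its columns are hypothetical, chosen only for illustration.

```python
import sqlite3

# Hypothetical table and columns; each column line is a "field" with a data type.
CREATE_SQL = """
CREATE TABLE customers (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    city TEXT
)
"""


def create_and_describe():
    """Create the table, insert one record, and return (field names, records)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(CREATE_SQL)
    # A "record" is one row of actual data.
    conn.execute(
        "INSERT INTO customers (id, name, city) VALUES (?, ?, ?)",
        (1, "Asha", "Pune"),
    )
    # PRAGMA table_info returns one row per column; index 1 is the column name.
    columns = [row[1] for row in conn.execute("PRAGMA table_info(customers)")]
    records = conn.execute("SELECT id, name, city FROM customers").fetchall()
    conn.close()
    return columns, records


columns, records = create_and_describe()
print(columns)
print(records)
```

The statement defines the structure (fields and their types) up front, and only afterwards can records be stored in it.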


In our case, if a row corresponding to a given `id` exists in `sample.output_data`, it will be updated; otherwise a new record will be inserted into the `sample.output_data` table.

Our DAG would have run a few times by now. Let's say we want to change the processed text to `World, Good day` instead of just `World`, starting at 10AM UTC on and ending 13 (1PM) UTC.

First we pause the running DAG, change `World` to `World, Good day` in your `sample_dag.py`, and then run the commands shown below.

`docker-compose -f docker-compose-LocalExecutor.yml down`

Hope this article gives you a good idea of how to use Airflow's `execution_date` to backfill a SQL script and how to leverage Airflow macros.

The UPSERT relies on a PostgreSQL feature that lets us write update-or-insert queries keyed on a unique identifier (`id` in our case).
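The sketch below illustrates why an UPSERT keeps a re-run from creating duplicates. It is not the original pipeline's code: it uses Python's built-in `sqlite3` (SQLite 3.24+ accepts an `ON CONFLICT ... DO UPDATE` clause similar to PostgreSQL's) as a stand-in for Postgres, a table named `output_data` without the `sample` schema, and a hypothetical `processed_text` column.

```python
import sqlite3

# Assumed schema: `id` is the unique key; `processed_text` is a hypothetical column.
UPSERT_SQL = """
INSERT INTO output_data (id, processed_text)
VALUES (?, ?)
ON CONFLICT (id) DO UPDATE SET processed_text = excluded.processed_text
"""


def run_load(conn, rows):
    """Write rows with an UPSERT so that re-running the load is idempotent."""
    conn.executemany(UPSERT_SQL, rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE output_data (id INTEGER PRIMARY KEY, processed_text TEXT)"
)

# First run of the pipeline.
run_load(conn, [(1, "World"), (2, "World")])
# Backfill re-run over the same ids with changed logic: rows are updated, not duplicated.
run_load(conn, [(1, "World, Good day"), (2, "World, Good day")])

result = conn.execute(
    "SELECT id, processed_text FROM output_data ORDER BY id"
).fetchall()
print(result)
```

With a plain INSERT, the second `run_load` call would either fail on the primary key or, without a key, leave four rows instead of two.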

Backfilling refers to any process that involves modifying or adding new data to existing records in a dataset. This is a common use case in data engineering. For example:

- a change in some business logic may need to be applied to an already processed dataset.
- you might realize that there is an error with your processing logic and want to reprocess already processed data.
- you may want to add an additional column and fill it with a certain value in an existing dataset.

Most ETL orchestration frameworks provide support for backfilling.

- How can I modify my SQL query to allow for Airflow backfills?
- How can I manipulate my execution_date using Airflow macros?

You can visualize the backfill process as shown below.
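To make the `execution_date` question concrete, here is a minimal sketch in plain Python of the idea behind a backfill-friendly query: the query is parameterized by the run's execution date rather than by "now", so re-running an old interval selects the same slice of data. Airflow itself renders such values through its templating; this sketch uses `str.format` as a stand-in. The table `input_data`, the column `event_time`, the hourly schedule, and the example date are all assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical source table and timestamp column.
# String formatting is used only because the values are timestamps we generate
# ourselves; prefer bound parameters for any externally supplied value.
QUERY_TEMPLATE = (
    "SELECT id, input_text FROM input_data "
    "WHERE event_time >= '{start}' AND event_time < '{end}'"
)


def hourly_window(execution_date):
    """Return the [start, end) hour that a run with this execution date covers."""
    start = execution_date.replace(minute=0, second=0, microsecond=0)
    return start, start + timedelta(hours=1)


def render_query(execution_date):
    """Fill the template with the window derived from the execution date."""
    start, end = hourly_window(execution_date)
    return QUERY_TEMPLATE.format(start=start.isoformat(), end=end.isoformat())


def backfill_dates(first, last):
    """Yield one execution date per hour from first to last, inclusive."""
    current = first
    while current <= last:
        yield current
        current += timedelta(hours=1)


# Example date chosen arbitrarily for the sketch.
first = datetime(2020, 1, 1, 10, tzinfo=timezone.utc)
last = datetime(2020, 1, 1, 13, tzinfo=timezone.utc)
queries = [render_query(d) for d in backfill_dates(first, last)]
for q in queries:
    print(q)
```

Because each query depends only on its execution date, a backfill is just a replay of the same function over past dates, which pairs naturally with an UPSERT on the write side.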
